Deep Learning

Deep Learning Architectures

- feed-forward networks

- auto-encoders (the output is trained to reconstruct the input image; the middle layer is smaller than the input, so its activations can be used as a compressed representation)

- recurrent neural networks (RNNs) (at run time, values from the previous step are fed back as part of the input to the middle (hidden) layer)

- convolutional neural networks (CNNs)
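The feedback in an RNN is easiest to see in a few lines of code. This is a minimal sketch that assumes a single hidden unit, scalar inputs, a `tanh` activation, and hand-picked weights. The names `rnn_forward`, `w_x`, `w_h`, and `b` are illustrative, not from any library.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Run a one-unit recurrent layer over a sequence of scalar inputs.

    At each time step the hidden value from the previous step is fed back
    in next to the new input:  h_t = tanh(w_x * x_t + w_h * h_prev + b)
    """
    h_prev = 0.0            # no history before the first input
    states = []
    for x_t in inputs:
        h_t = math.tanh(w_x * x_t + w_h * h_prev + b)
        states.append(h_t)
        h_prev = h_t        # feedback: this step's hidden value joins the next step's input
    return states

if __name__ == "__main__":
    seq = [1.0, 1.0, 1.0]
    # With feedback, the same input yields a different hidden value at each step.
    print(rnn_forward(seq, w_x=0.5, w_h=0.8, b=0.0))
    # With w_h = 0 there is no feedback, so it behaves like a feed-forward unit.
    print(rnn_forward(seq, w_x=0.5, w_h=0.0, b=0.0))
```

Setting `w_h` to 0 removes the feedback. The unit then gives the same output for the same input at every step, just like a feed-forward unit.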

Topics in Deep Learning

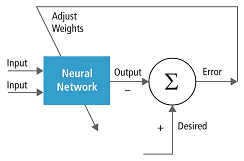

- Neural Networks: perceptrons, activation functions, and basic neural networks. Recall from the discussion of NNs that you train with inputs and desired outputs, and use the error to adjust the weights through some kind of "learning algorithm".

- Logistic Classifier: a NN whose output-layer values sum to 1.0, meaning 100% probability (typically achieved with a softmax). Each output node therefore represents the probability of the "thing it represents" occurring.

- Optimization: techniques for optimizing classifier performance, including validation and test sets, gradient descent, momentum, and learning rates. You can control these in the setup of our "deep learning" neural network.

- Regularization: techniques, including dropout, to avoid overfitting a network to the training data.
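The Logistic Classifier and Optimization topics fit together in one small example. This is a minimal sketch that assumes pure Python, a hand-made toy dataset, and arbitrary hyperparameters. The values of `lr`, `momentum`, and `epochs` are illustrative, not taken from these notes. The output layer uses a softmax so the class probabilities sum to 1.0. The weights are adjusted from the error (predicted probability minus desired output) by full-batch gradient descent with momentum.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1.0."""
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(W, b, x):
    """Class probabilities for one input vector x (one weight row per class)."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(scores)

def train(data, n_classes, lr=0.1, momentum=0.9, epochs=500):
    """Full-batch gradient descent with momentum on the cross-entropy loss."""
    n_features = len(data[0][0])
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    vW = [[0.0] * n_features for _ in range(n_classes)]  # momentum "velocity" for W
    vb = [0.0] * n_classes                               # momentum "velocity" for b
    n = len(data)
    for _ in range(epochs):
        gW = [[0.0] * n_features for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, label in data:
            p = predict_proba(W, b, x)
            for k in range(n_classes):
                # error = predicted probability - desired output (1 for the true class, else 0)
                err = p[k] - (1.0 if k == label else 0.0)
                gb[k] += err / n
                for j in range(n_features):
                    gW[k][j] += err * x[j] / n
        for k in range(n_classes):
            # momentum: keep a decaying running sum of past steps, then move by it
            vb[k] = momentum * vb[k] - lr * gb[k]
            b[k] += vb[k]
            for j in range(n_features):
                vW[k][j] = momentum * vW[k][j] - lr * gW[k][j]
                W[k][j] += vW[k][j]
    return W, b

if __name__ == "__main__":
    # Toy data: class 0 near (0, 0), class 1 near (1, 0), class 2 near (0, 1).
    data = [([0.0, 0.0], 0), ([0.2, 0.1], 0),
            ([1.0, 0.0], 1), ([0.9, 0.2], 1),
            ([0.0, 1.0], 2), ([0.1, 0.9], 2)]
    W, b = train(data, n_classes=3)
    probs = predict_proba(W, b, [0.95, 0.1])
    print([round(p, 3) for p in probs], "sum =", round(sum(probs), 6))
```

In practice you would also hold out a validation set to choose `lr` and `momentum`, and a separate test set to report final accuracy. This sketch skips both to stay short.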

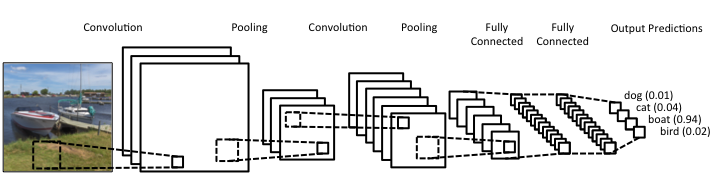

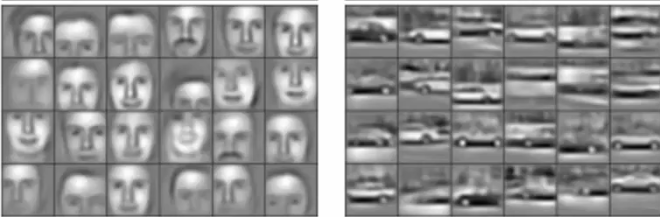
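Dropout randomly zeroes hidden-unit outputs during training so the network cannot rely too heavily on any single unit. This is a minimal sketch that assumes the common "inverted dropout" variant, where survivors are scaled by 1/(1 - p_drop) during training. It operates on a plain Python list of activations. The names `dropout` and `p_drop` are hypothetical.

```python
import random

def dropout(activations, p_drop, training=True, rng=random):
    """Inverted dropout on a list of activations.

    Training: each value is zeroed with probability p_drop; survivors are scaled
    by 1 / (1 - p_drop) so the expected value of each unit is unchanged.
    Testing/inference: values pass through untouched (no scaling needed).
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

if __name__ == "__main__":
    rng = random.Random(0)                 # fixed seed so the run is repeatable
    hidden = [0.4, 1.2, 0.7, 0.9, 0.1, 0.5]
    print(dropout(hidden, p_drop=0.5, rng=rng))            # about half zeroed, rest doubled
    print(dropout(hidden, p_drop=0.5, training=False))     # unchanged at test time
```

Scaling during training, rather than at test time, means the trained network can be used as-is for inference.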

- Convolutional Neural Networks: building blocks of convolutional neural networks, including filters, stride, and pooling.

Image Processing:

- Input layer: every pixel in the image (or sub-image) is presented as an input. The data could be RGB, grayscale, lidar, or something else.

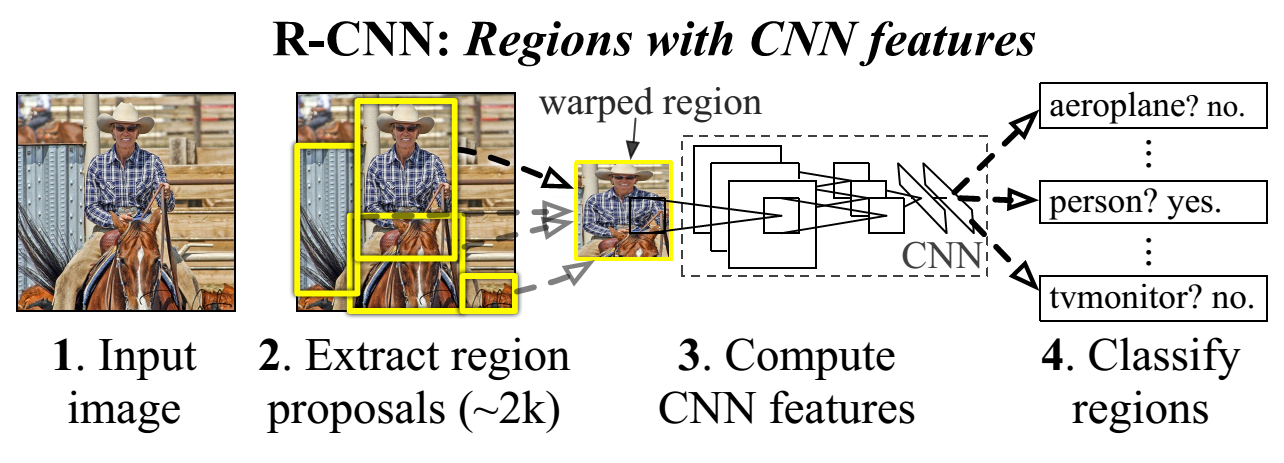
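Filters, stride, and pooling can be sketched for a single grayscale image stored as a list of pixel rows, one value per input pixel. This is a minimal sketch that assumes no padding, one input channel, and a hand-picked vertical-edge filter; in a real CNN the filter values are learned. The helper names `conv2d` and `max_pool` are hypothetical. As is common in CNN frameworks, the "convolution" here is computed as cross-correlation, so the filter is not flipped.

```python
def conv2d(image, kernel, stride=1):
    """Valid (no padding) 2-D cross-correlation of a grayscale image with a filter."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r, c = i * stride, j * stride      # top-left corner of this window
            total = sum(image[r + u][c + v] * kernel[u][v]
                        for u in range(kh) for v in range(kw))
            row.append(total)
        out.append(row)
    return out

def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [[max(feature_map[i * stride + u][j * stride + v]
                 for u in range(size) for v in range(size))
             for j in range(out_w)]
            for i in range(out_h)]

if __name__ == "__main__":
    # 6 x 6 image: dark left half (0), bright right half (1).
    image = [[0] * 3 + [1] * 3 for _ in range(6)]
    kernel = [[-1, 0, 1]] * 3                  # responds to dark-to-bright vertical edges
    fm = conv2d(image, kernel)                 # stride 1 -> 4 x 4 feature map
    print(fm)
    print(max_pool(fm))                        # 2 x 2 after pooling
    print(conv2d(image, kernel, stride=2))     # stride 2 -> 2 x 2 feature map
```

A larger stride or a pooling step both shrink the feature map. Pooling does this by summarizing each window, while stride simply skips positions.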

Object Recognition with Deep Learning

Typically there is little image processing. Here is an example where, rather than passing in the entire image, we pass in sub-images (regions).
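Passing regions instead of the whole image can be sketched as a sliding window: cut the image into sub-images and hand each one to the network. This is a minimal sketch that assumes square windows and a fixed stride. A `classify` callback stands in for the trained network. The names `sliding_windows`, `crop`, `detect`, and `classify` are hypothetical. These notes do not say how the regions are actually chosen, and sliding windows are only one simple option.

```python
def sliding_windows(height, width, win, stride):
    """Yield (top, left, bottom, right) boxes for square win x win regions."""
    for top in range(0, height - win + 1, stride):
        for left in range(0, width - win + 1, stride):
            yield (top, left, top + win, left + win)

def crop(image, box):
    """Cut one region out of an image stored as a list of pixel rows."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]

def detect(image, classify, win, stride, threshold=0.5):
    """Pass each sub-image (region) to `classify` and keep confident regions.

    `classify` stands in for a trained network: any function that maps a
    region to a probability in [0, 1].
    """
    height, width = len(image), len(image[0])
    hits = []
    for box in sliding_windows(height, width, win, stride):
        p = classify(crop(image, box))
        if p >= threshold:
            hits.append((box, p))
    return hits

if __name__ == "__main__":
    # Toy 4 x 6 grayscale image with one bright 2 x 2 patch.
    image = [[255 if r in (2, 3) and c in (2, 3) else 0 for c in range(6)]
             for r in range(4)]

    def mean_brightness(region):
        """Placeholder "classifier": average pixel value scaled to [0, 1]."""
        return sum(map(sum, region)) / (len(region) * len(region[0])) / 255

    print(detect(image, mean_brightness, win=2, stride=2))
```

A smaller stride gives overlapping regions and finer localization, at the cost of more network evaluations per image.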